Can Transformers Learn Logical Reasoning from Scratch?

:::info Authors:

(1) Emmanuel Abbe, Apple and EPFL;

(2) Samy Bengio, Apple;

(3) Aryo Lotf, EPFL;

(4) Colin Sandon, EPFL;

(5) Omid Saremi, Apple.

:::

Table of Links

Abstract and 1. Introduction

1.1 Syllogisms composition

1.2 Hardness of long compositions

1.3 Hardness of global reasoning

1.4 Our contributions

-

Results on the local reasoning barrier

2.1 Defining locality and auto-regressive locality

2.2 Transformers require low locality: formal results

2.3 Agnostic scratchpads cannot break the locality

-

Scratchpads to break the locality

3.1 Educated scratchpad

3.2 Inductive Scratchpads

-

Conclusion, Acknowledgments, and References

A. Further related literature

B. Additional experiments

C. Experiment and implementation details

D. Proof of Theorem 1

E. Comment on Lemma 1

F. Discussion on circuit complexity connections

G. More experiments with ChatGPT

Abstract

Can Transformers predict new syllogisms by composing established ones? More generally, what type of targets can be learned by such models from scratch? Recent works show that Transformers can be Turing-complete in terms of expressivity, but this does not address the learnability objective. This paper puts forward the notion of distribution locality to capture when weak learning is efficiently achievable by regular Transformers, where the locality measures the least number of tokens required in addition to the tokens histogram to correlate nontrivially with the target. As shown experimentally and theoretically under additional assumptions, distributions with high locality cannot be learned efficiently. In particular, syllogisms cannot be composed on long chains. Furthermore, we show that (i) an agnostic scratchpad cannot help to break the locality barrier, (ii) an educated scratchpad can help if it breaks the locality at each step, (iii) a notion of ‘inductive scratchpad’ can both break the locality and improve the out-of-distribution generalization, e.g., generalizing to almost double input size for some arithmetic tasks.

1 Introduction

Transformers [1] have proved to have strong learning capabilities, in particular in applications with large amounts of text, image, or audio data [2, 3]. Some reasoning capabilities are also notable in these settings, however, the picture deteriorates when the target complexity increases, such as in tasks involving more advanced forms of ‘reasoning’ [4, 5, 6, 7, 8, 9, 10]. While reasoning is present at all levels of learning, it is pushed to a higher level in tasks such as logic or mathematics, where ‘learning by seeing enough representative examples’ is precluded by the more combinatorial nature of the task. For such tasks, combining learned concepts in order to extrapolate seems necessary, as for the length generalization problem [11]. Current Transformer-based models exhibit difficulties learning at scale on such tasks. Can we understand why and what is missing? We start with a specific motivational example before expanding the discussion to more general tasks.

1.1 Syllogisms composition

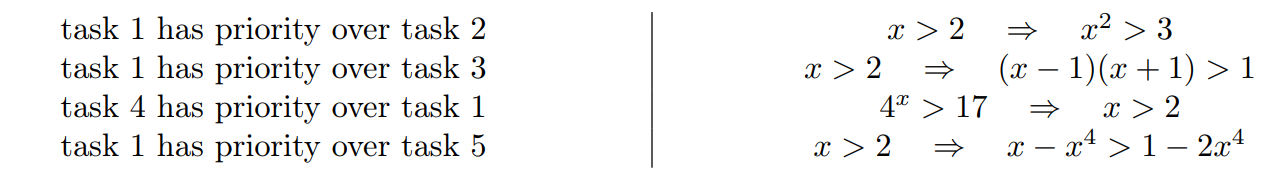

Reasoning relates to the process of inferring new knowledge by composing efficiently some prior knowledge. A basic notion of reasoning is syllogism composition, e.g., inferring a ⇒ c from a ⇒ b and b ⇒ c. For instance, one may be given a set of implications:

\ \

\ \n and without additional background information, one would like to know using logic whether

\ \

\ \ The goal here is to identify whether a syllogism can be composed[1] by prior ones. Simplifying the input format, the above correspond to identifying paths in token sequences describing the directed edges of an underlying graph, i.e., whether there is a directed path 3 → 5 (case 1) or 4 → 2 (case 2) using the directed edges {(1 → 2),(1 → 3),(4 → 1),(1 → 5)}.

\ This type of task is nontrivial for current LLMs, and we refer to Appendix G for some further experiments with GPT models.[2] Note that here we are not interested specifically in solving a graph-based task, but rather in understanding when Transformers can compose and more generally how far they can do so. We would like to identify particular measures on the data distribution (e.g., syllogisms topologies in the above example) that capture when Transformers can efficiently learn.

1.2 Hardness of long compositions

Consider the previous syllogism composition task where implications are drawn on a graph with 24 edges drawn randomly over 24 vertices. Picking vertices at distances 1 to 4 for the connected case and picking disconnected vertices uniformly at random lets a Transformer achieve a test accuracy of more than 80% after about 2K iterations. However, does this mean that the model has learned to compose syllogisms, or has it found shortcuts, e.g., based on node degrees, to guess the implications often enough? In Appendix B.1, we provide empirical evidence supporting the latter. Motivated by this issue and to preclude spurious correlations, we consider the following distribution.

\ Definition 1. For n ≥ 1, consider the binary classification task with equiprobable classes defined by

\

-

Class 1: a graph uniformly drawn on 2n vertices with two disjoint cycles of length n and a pair of vertices in disjoint cycles queried for path;

\

-

Class 2: a graph uniformly drawn on 2n vertices with one cycle of length 2n and a pair of vertices at distance n queried for path.

\ The input of this task is the graph edge set with the queried vertices. The label is 0 if the two queried vertices are not connected (Class 1) and 1 if they are (Class 2). We refer to this task as the ‘cycle task’. See Figure 1a for an illustration.

\ Figure 1b shows that the learning complexity increases ‘exponentially’ as n grows using GPT2-style Transformers of more than 10M, 25M, 85M parameters; e.g., the 10M model fails to learn for n ≥ 7 in 100K iterations. Why is that? Can a larger scale further help here?

\ Can a large (poly-size) Transformer learn the cycle task when n gets large? If not, why so?

\ A challenge for the cycle task is that there is no clear ‘low-complexity pattern’ in the input representation that indicates whether there are 1 or 2 cycles. No simple statistics based on degrees, edge counts, or finite motif counts that can tell if the vertices are connected or not. One has to consider at least n edges in order to get any correlation with the presence of a path. In other words, the task requires a ‘global reasoning’ involving a ‘large’ number of input tokens and this seems hard for Transformers.

\

:::info This paper is available on arxiv under CC BY 4.0 license.

:::

\n

You May Also Like

RWA Crypto Projects Gain Momentum with Chainlink, VeChain, and Avalanche Surging in Engagement

XRP Holders Can Mint Stablecoins via Enosys Loans on Flare